Sailorsaint

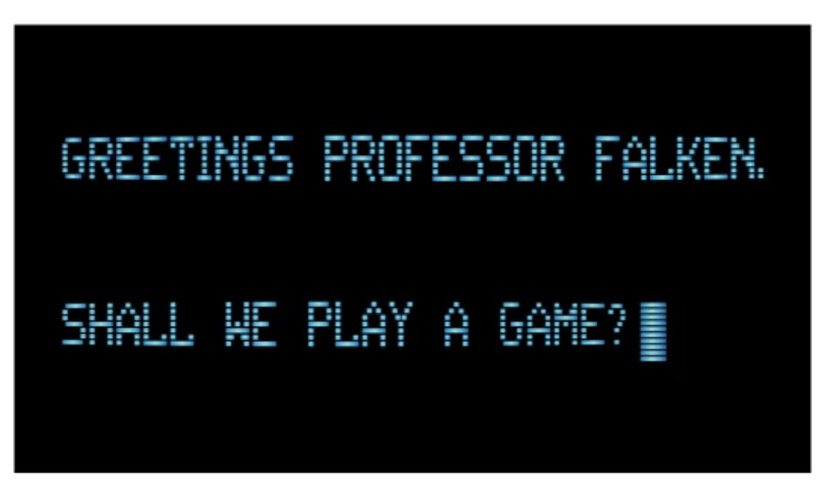

Will Smith movie, wasn't it? I think I kind of remember watching it. Ummm, did they not watch the 2005 movie, "Stealth"? That's basically the plot of the movie... 18 years ago.

Bonus picture from the movie in case any of you missed it.